Free Person

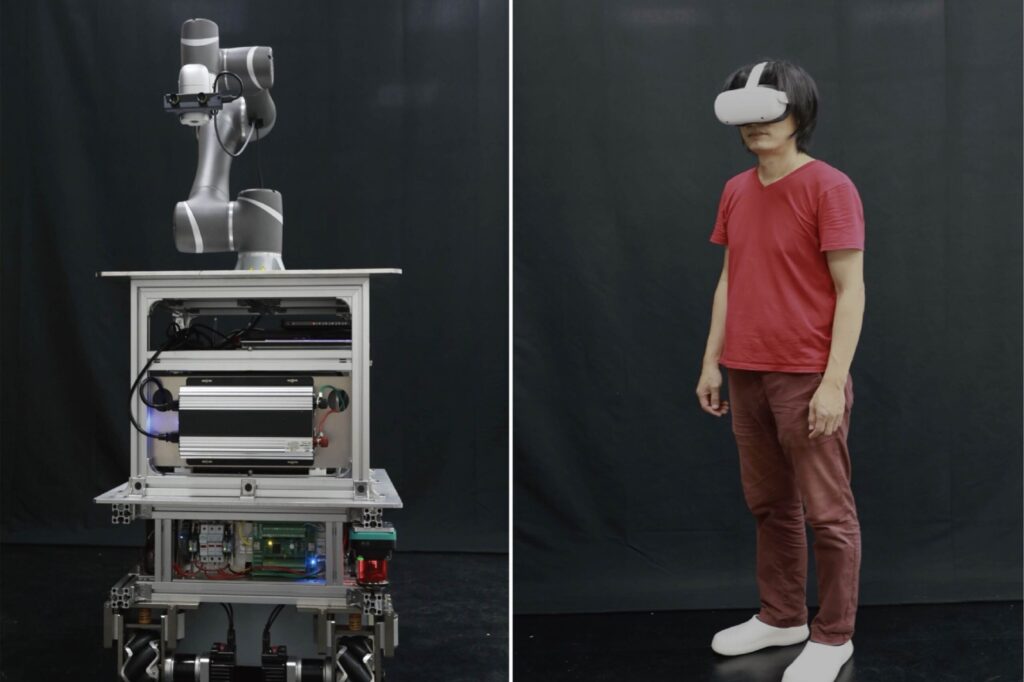

This is an interactive exhibition project I took part in during my master’s degree in CS. It connects two physical spaces by creating a co-located digital body for the user with an autonomous robot, a wearable prop device, and an XR headset.

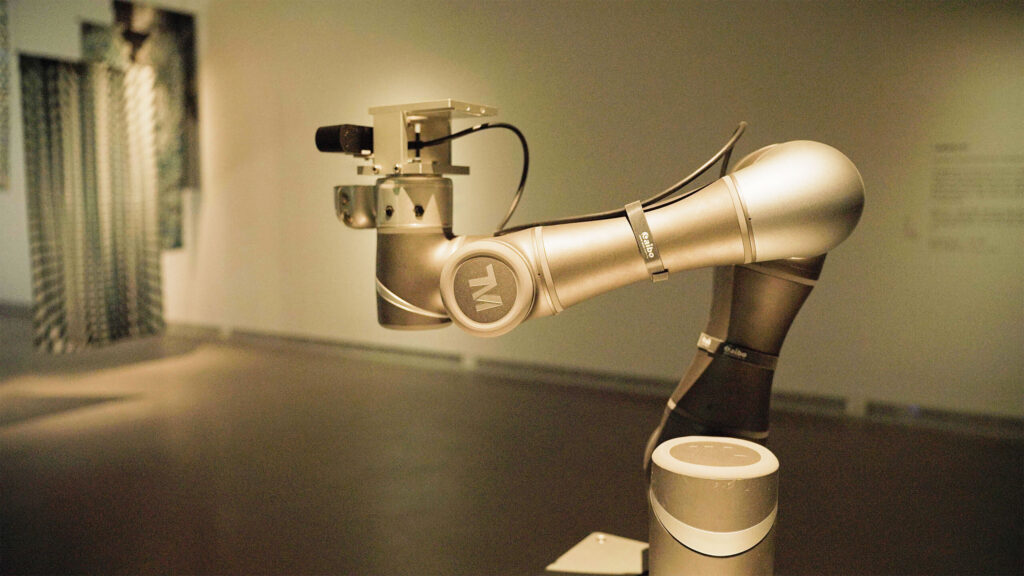

Free Person’s telepresence concept is achieved by an autonomous robot, an XR headset, and a wearable prop device.

Introducing Free Person

This work connects two physical spaces over a 5G internet connection and creates a transmission platform for bodies.

Audiences are freed from the limitations of physics, time, and space, and can walk and move freely in the remote space.

Still, they are surveilled by the program and the machine at all times. They are situated in “a contradictory free state” of free will and machine monitoring: mutual infiltration, mutual compromise, and mutual deformation.

Free Person project explained.

The exhibition

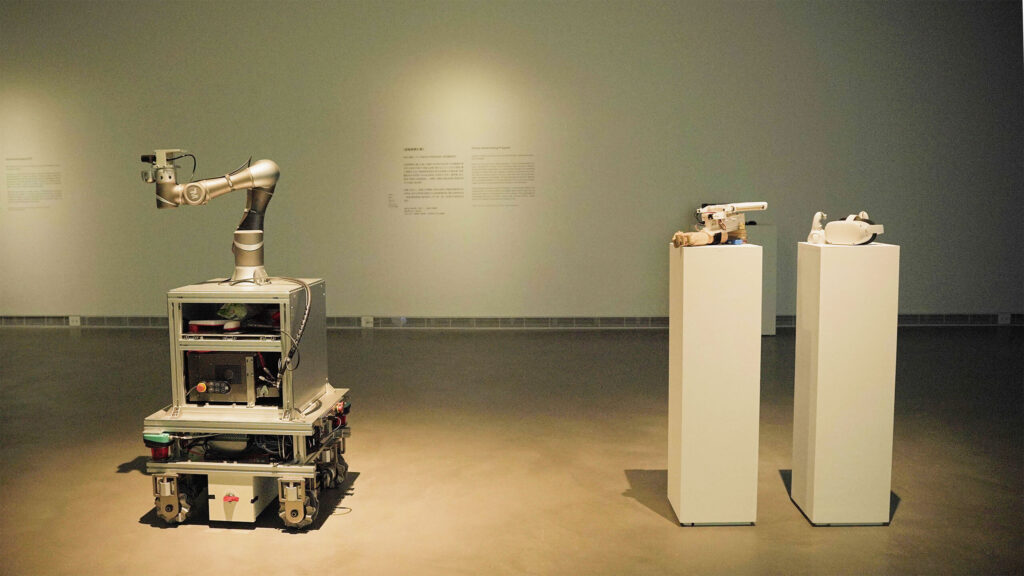

Free Person was exhibited at the WRO Art Center in Poland during the Media Art Biennale WRO 2023.

Audiences experienced the event simultaneously at the Wumei Theater in Huashan, Taiwan, and at the Wroclaw Media Art Biennale in Poland.

By integrating a mobile robot with a 360-degree panoramic VR camera, audiences at the exhibition in Poland could wear VR headsets and, through 5G internet, view real-time 360-degree panoramic images from the “Huashan 1914 Creative Park.”

This allowed for synchronous remote movement, making it seem as if their bodies were present at “Huashan 1914 Creative Park” in real time. Wearing the VR headsets, these remote visitors could also control the robot’s movements, roaming freely around “Wumei Theater” as if they were physically there.

Furthermore, audiences in Poland wore a prop device on their arms that provided varying texture feedback when interacting with objects in the VR scene.

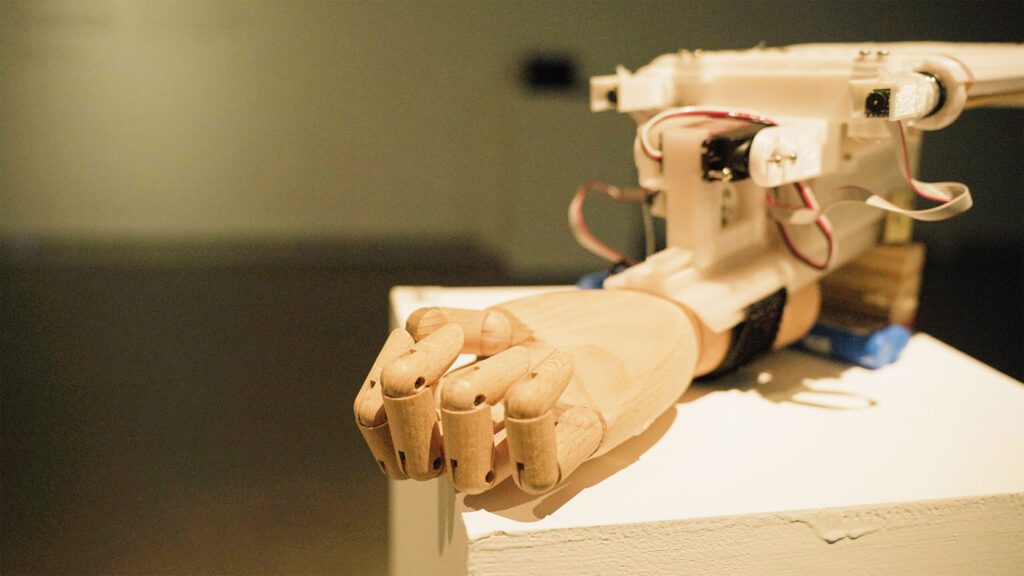

The prop device

We designed and created the prop device using 3D printing, DC motors, and an IMU sensor.

This device can simulate up to four different textures on the palm and adjust the surface angle to match the objects with which the audiences interact.

The IMU sensor detects the angle of the arm relative to the hand and calculates the object’s surface orientation, compensating for the Oculus headset’s lack of precise arm tracking.
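
As a rough illustration of this step (not the code used in the exhibition), the sketch below takes the object's surface angle from the VR scene and the arm angle from the IMU, both reduced to a single axis in degrees, and returns the angle the texture surface would need relative to the arm. The function names, the single-axis simplification, and the 60-degree mechanical limit are all assumptions made for the example.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def plate_angle_deg(surface_angle_deg: float,
                    arm_angle_deg: float,
                    limit_deg: float = 60.0) -> float:
    """Angle of the texture surface relative to the arm.

    surface_angle_deg: orientation of the virtual object's surface
                       (hypothetical value supplied by the VR scene).
    arm_angle_deg:     arm orientation measured by the IMU.
    limit_deg:         assumed mechanical travel of the device.
    """
    relative = wrap_deg(surface_angle_deg - arm_angle_deg)
    # Clamp to what the hardware could physically reach.
    return max(-limit_deg, min(limit_deg, relative))
```

Wrapping before clamping keeps the result sensible when the two readings sit on either side of the ±180 degree boundary.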

A reel mechanism was employed to store the four textures and deploy the appropriate one based on the user’s interaction.
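
To make the selection step concrete, here is a minimal sketch that assumes the four textures sit in evenly spaced slots around the reel, 90 degrees apart, and that the reel can turn in either direction. Neither detail is confirmed by the build described here, and the function name and slot numbering are hypothetical.

```python
def reel_rotation_deg(current_slot: int, target_slot: int, slots: int = 4) -> float:
    """Shortest signed rotation (degrees) from the current slot to the target.

    Assumes `slots` textures evenly spaced around the reel and numbered
    0..slots-1. Positive means forward, negative means backward.
    """
    step = 360.0 / slots
    delta = (target_slot - current_slot) % slots
    # Go backward when that is the shorter way round.
    if delta > slots / 2:
        delta -= slots
    return delta * step
```

For example, moving from slot 0 to slot 3 turns the reel back by one step rather than forward by three.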

The prototype of the prop device renders the angle of the object’s surface in real time.

The hinge system

We simulated the entire prop device within Unity to determine the distance the texture surface needed to extend in order for the entire palm to make contact. This was not a straightforward rotation due to the thickness of the palm.
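
One simplified way to see why the thickness matters, separate from the Unity simulation itself: assume a 2D side view in which the hinge axis sits a distance t away from the texture surface. Rotating the surface by an angle θ about that axis is then equivalent to rotating it about a line on the surface itself, shifted by t·tan(θ/2). The sketch below computes that shift; the model, the function name, and the example numbers are assumptions for illustration only.

```python
import math


def surface_shift(thickness: float, angle_deg: float) -> float:
    """Shift of the effective pivot along the surface, in the units of `thickness`.

    Simplified 2D model (an assumption): the hinge axis is offset from the
    texture surface by `thickness`, and the surface rotates by `angle_deg`
    about that axis. The rotated surface meets the original one at a point
    thickness * tan(angle / 2) away from the foot of the hinge.
    """
    return thickness * math.tan(math.radians(angle_deg) / 2.0)
```

With zero thickness the shift vanishes and the motion reduces to a plain rotation, which matches the observation that the palm's thickness is what complicates the geometry.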

Learn more about the exhibition:

https://nstc-artstech.net/project/virtual-homecoming-program-zh

https://www.huashan1914.com/w/umaytheater/performance_23042617321649507